Abstract: The aim of the paper is to present the results of a survey on the ethics of artificial intelligence conducted in 2020. The opinions of 255 participants were obtained by means of a questionnaire and processed using chi-square (χ2) statistics in order to study how important a role gender played in the participants’ views on selected aspects of the ethics of artificial intelligence. The basic paradigms of the survey were drawn from theories of social constructivism; concerning moral issues, Kohlberg’s and Gilligan’s theories were used; with respect to ethical education, Adler’s understanding was referred to. Although the participants’ gender was not found to play a significant role in most of the studied areas, differences were found in the awareness of machine learning, the ability to imagine people having a humanoid robot as their companion, entrusting artificial intelligence with people’s safety, and the level of comfort provided by artificial intelligence.

Keywords: Ethics. Artificial intelligence. Humanoid. Robot. Responsibility.

Introduction

In the 21st century, information technologies have continued to advance rapidly and have become interwoven with every area of human life. A great number of innovations, new applications, and software products are based on artificial intelligence (AI). Machines are becoming ever more effective learners and decision makers; this progress, however, also raises ethical concerns.

Man is a unique being (not only from the philosophical viewpoint), aware of himself as well as of his environment. Through language, people can share their thoughts and express themselves creatively, and this creativity is reflected in culture. By being aware of their existence, a person enters into a relationship with their environment, which both shapes and defines them. They are themselves, but they are also their environment. They constantly search for the meaning of their existence and strive to alter it to give it sense. It is key that, when defining themselves, people are aware of their unique personhood as distinct from their environment. The definition of one’s ‘I’ takes place in a dialogue with ‘You’ or ‘They’ (cf. Buber, 2016). Understanding the self when meeting others and forming a relationship with them is a dialogical event through which a person manifests their existence, uniqueness, and individuality towards others. This can only happen in the presence of other people, who also define themselves against each other. Relationships in the sphere of contact between man and artificial intelligence can be understood as a dialogue between ‘We’ and ‘They’. Among people, there are rules according to which we present our own personality and accept the personalities of others. With regard to an entity based on artificial intelligence, however, it might be necessary to define these relationships differently, so that clear rules are established for cooperation and, possibly, in the future, for mutual symbiosis. The approach so far has been based on the assumption that humans think in the context of human society and its goods; the inhabitants of Earth have not yet encountered beings more intelligent than themselves, so there has been no need to deal with relationships towards other intelligences. Nevertheless, viewing man and his relationship towards artificial intelligence in this way appears to be obsolete.
If the quantity of good in the form of “the greatest good possible for as many people as possible” (cf. Mill, 2001, 15-25) is considered, one must definitely take the side of man as a bio-psycho-social (and above all social) being, aware of the quality and intensity of his own relationships.

Theoretical background

The overall conception of the present survey was based on the work of Bostrom (2014), while the work of Pokol (2010, 2016, 2017, 2018) served as the philosophical and ethical basis for the research questions. Further sources were the Handbook of Algorithm Ethics (Pikus – Podroužek, 2019) and information obtained from the website of the Kempelen Institute of Intelligent Technologies (KInIT). Existing research into the ethics of artificial intelligence shows that a great number of authors and institutions study this area from various viewpoints. What appears problematic, however, is the lack of a society-wide discourse, especially in such places as China or Hong Kong. Naturally, the social systems there play a significant role, which might pose an obstacle to global agreement. Another problem is the speed at which AI advances, with the private sector advancing fastest. If the ethical rules for its use are vague or missing altogether, this might cause difficulties with respect to such values as transparency, justice, privacy, responsibility, reliability, and environmental sustainability. In Europe (and not only there), the standardisation of ethical rules in this area is a priority. This is evidenced by such efforts as the publication ‘A white paper on artificial intelligence – European Approach to Excellence and Trust’ (2020). In 2021, it was supplemented by the Regulation of the European Parliament and of the Council on Machinery Products (2021). The Regulation replaces the original guidelines for machinery, with the aim of completing and simplifying administrative processes for companies that use machinery with implemented AI. The document elaborates on the risks posed by AI and classifies them as minimal, limited, high, or unacceptable.
In the Czech Republic, a self-contained legal framework for artificial intelligence was developed, entitled ‘Research into the potential development of artificial intelligence in the Czech Republic: An analysis of legal and ethical aspects of the development of artificial intelligence and their applications in the Czech Republic’ (2018). It deals with ‘superintelligence’ as well as with the issue of an electronic person and systems using similar bases. These documents were used as the basis for Section 1 of the present research. In Slovakia, the Kempelen Institute of Intelligent Technologies (which conducts research into intelligent technologies) was founded, interconnecting the academic and private sectors. Its working group deals with ethical issues of artificial intelligence.

AI, the ethics of AI, and the relationships between man and machine are dealt with by a great number of authors. Their research and results are narrow in focus, which is understandable given the breadth of the area. The ethics of algorithms is researched by Vieth and Wagner (2017), and human rights and artificial intelligence are studied by Marda (2014, 2018). Ienca (2019) deals with the ethics and administration of biomedical data, as well as with ethically tuned AI and responsible innovation for emerging technologies where man and machine interface. Among others, he cooperates with Anna Jobin (2016, 2019), who studies interaction with systems and ethical AI. Such values as privacy, justice, and transparency are dealt with by Hustedt and Fetic (2020). Hui (2018) focuses on new media and scientific communication, scientific and technological revolutions, industrial transformations, and the social influence and regulation of AI. Mann (2018) researches transnational crime, technologies used by the police, monitoring, and transnational online police forces. This brief overview makes it clear that a field as broad as artificial intelligence can hardly be covered in a single research study. The above authors inspired Sections 3 and 5 of the present research.

The study by Lima et al. (2020) deals with topics that are also the focus of the present survey, such as the civil rights of humanoid robots based on artificial intelligence. Lima’s research sought to find out the respondents’ opinions on granting humanoid robots citizenship, as was the case with the humanoid robot Sophia. This issue is also touched on in the present paper. The study also observed how the respondents’ attitudes towards artificial intelligence changed after they were shown pre-prepared information cards, and how much time they spent looking at them.

Fong, Nourbakhsh, and Dautenhahn (2003, 150) also work in this area; they classify social robots according to their shape into zoomorphic, anthropomorphic, caricatured, and functional. In the relationship between man and a robot whose primary function is social interaction, appearance might play a significant role. Therefore, in the context of social interaction, the authors of the paper adhere to the above classification. The questionnaire asked about the influence of an anthropomorphic depiction and realisation of a robot. It also asked about the level of agreement with the robot’s appearance in various developmental and social stages (child, adult, partner). We also wanted to find out whether people could imagine various types of relationships between man and a humanoid robot. This was based on research by Samani (2011, 2013) into lovotics (love + robotics) using non-humanoid robots. He studied the robots’ emotional manifestations towards humans by means of an artificial endocrine system and artificial hormones that produce various moods towards humans. The complexity of relationships between people suggests how many problems might arise in relationships between humans and humanoid robots based on artificial intelligence. Newton Fernando, Nakatsu, and Tzu Kwan Valino Koh (2013) also study social robots. Ethics and the interconnection between robots and artificial intelligence are elaborated on by Bartneck, Lütge, Wagner, and Welsh (2021) in their publication ‘An introduction to ethics in robotics and AI’.

Non-anthropomorphic types of social robots are addressed by Hoffman and Ju (2014), who explore the possibilities of these types of robots in interaction with humans. In the context of lovotics, social robots are dealt with by Cooney, Nishio, and Ishiguro (2014), who work on a theory that could aid in increasing human happiness and social well-being, i.e., feelings of happiness, health satisfaction, and other forms of welfare. Lovotics can be considered an area that might, in future, explain and aid relationships between social robots and humans by studying human love and the ability of man to form relationships. Some aspects of this topic were also outlined in the present survey.

Emotions in robots raise many ethical questions regarding their existence, rights, obligations, etc. Since the ethical aspects of the emotional system of robots are dealt with by Nitsch and Popp (2014), their research was used as the basis for Section 4 of the questionnaire.

The core paradigmatic basis

The present research was based on the social constructivism of human sexuality, which is not only manifested in one’s private life but intertwined with all areas of one’s endeavours. It is the paradigm defined in this way that helps us understand relationships towards artificial intelligence, humanoid robots, and their rights. Another starting point was the construction of moral development following Kohlberg’s and Gilligan’s concepts. The third aspect was Adler’s understanding of ethical education. We consider ethical competence to be an important basis for a person’s overall disposition, especially in their approach to artificial intelligence.

Marková states that “[s]ocio-constructivist approaches are associated with such names as Gergen (1974) – who pointed out that the socio-psychological is essentially historical, and also to the role of social constructivism in shaping moral resources for the future (Gergen, 2001); Berger and Luckmann (1966/1999), Shotter and Gergen (1989), and many others united by the thesis of the social construct of reality. As Nightingale (1997) states, social constructivism requires one to admit that cognition is not a true reflection of objectively existing phenomena, but is created and maintained in social interaction” (Marková, 2012, p. 27). Marková understands social constructivism as “an understanding of sexuality as socially constructed. At the forefront, there is a cultural context in which sexualities are embedded, where one learns about them and where they are created, organised, and experienced. [...] Even though sexual science, similarly to other systems of cognition, is not neutral, criticism of ideology and scientific explanation are always the first steps to change. In this way, deconstruction helps in uncovering ideological prejudices and might change the balance of power and promote social change. Ideology, for instance, in the above-mentioned instinctual (male) sexuality and its necessary consequences, should be deconstructed in favour of unencumbered gender-related sexual relationships” (Marková, 2012, 31-32).

In view of the above outline, we understand social constructivism as the creation of realities based on the language an individual uses and the group in which they live. This explains why reality, and the related rights and freedoms necessary for ethical artificial intelligence, are understood differently in different cultural and social contexts. These should not only be applied to an essentialist understanding of men and women but also understood as constructs in a world of many realities. The men and women included in the research were, thus, not understood as carriers of the essence of biological sex but rather as constructs. In the present paper, artificial intelligence is understood in a similar way: intelligent software may or may not be understood as having a gender.

From the viewpoint of human moral development, the paper is based on the theories of Kohlberg and Gilligan (1963). However, their classification was not viewed from a male-female perspective. Rather, humans are considered constructs with the opportunity to develop into a pro-social personality that changes and develops in a certain way, be it in a female or a male body. In spite of this, certain differences in the morality of men and women were observed, and we therefore looked for possible explanations. When considering the observed differences, we referred to Gilligan’s description of the moral development of women (in Kakkori and Huttunen, 2010). Both theories have their shortcomings; nevertheless, the authors of the present paper relied on them when interpreting the results.

Regarding the goals of ethical education in Slovakia, educating a prosocial person as a construct means educating a personality who can be beneficial to themselves and to society. Based on Adler’s concept of ethical education, Marková states that “[o]n an individual level, ethical education should stimulate one’s self-awareness and somewhat contribute to the understanding of their subjective view of themself, the world, and other people, as well as their understanding of moral decision-making and behaviour. Ethical education is to further encourage a person on the way to their moral self-construction. It is also to promote such attitudes that contribute to the respect of oneself [and] the accountability for one’s decisions […]. Ethical education should, thus, enable a creative individual to be on the useful side of life […]. Adler’s ethical education is guided by the idea of an ideal human community, which arises from the requirements of social coexistence […]. These two dimensions – individual and social – are not merely interconnected by a sense of belonging but also a sense of democratic values” (Marková, 2016, 8-9).

Research aim and methods

The aim of the survey was to find out to what extent the present-day population realises the importance, opportunities, and risks of artificial intelligence. Its objectives were to capture the respondents’ views in the following areas:

- Artificial intelligence in general;

- Social relationships towards artificial intelligence;

- Responsibility and security;

- Law and civil freedom;

- Comfort versus loss of autonomous decision-making.

The present paper analyses the above areas as viewed by men and women involved in this study.

The data were collected by means of a questionnaire distributed electronically in Slovak, Hungarian, and English. These language versions reflect the national composition of the Slovak Republic, where a large Hungarian minority lives, for whom it is more convenient and natural to fill in the questionnaire in their mother tongue. The English version was provided because of global migration, on the assumption that Slovakia is also inhabited by non-native speakers of Slovak who would find English easier to understand. Respondents from the Czech Republic were also approached, mainly because of linguistic affinity and a shared state history. Any geographical overreach is considered an advantage and an enrichment of the target group. Nevertheless, the primary focus was the population of Slovakia and Slovak speakers living abroad.

The questionnaire is one of the most commonly used methods in the pedagogical sciences. It enables the researcher to obtain, relatively quickly, collective information about the respondents’ knowledge of, opinions on, or attitudes towards topics that are current or potentially current. Given that the aim of the present survey was to verify the extent to which the present-day population is aware of the importance, possibilities, and risks of artificial intelligence, the method was considered appropriate. The researchers were aware of its weaknesses. In this case, the items did not allow open answers in which the respondents could express their own opinions; only predefined options were offered. In order to eliminate this issue at least partially, the last item (a thank-you note for the time spent filling in the questionnaire) gave the respondents space to write down their subjective views. At the same time, this served as feedback on the ethics of artificial intelligence as such, rather than just on the issues addressed by the questionnaire. Another shortcoming of the method was that the number of respondents could not be influenced, as the questionnaire was distributed electronically. On the other hand, respondents of a wide age range were reached, which was perceived as a benefit. The time needed to complete the questionnaire (approximately 15 minutes) was also seen as potentially problematic, as it might have demotivated potential respondents. Due to the low number of answers obtained, the present survey cannot be considered representative. The conclusions and generalisations therefore apply only to the target group rather than to the whole population.

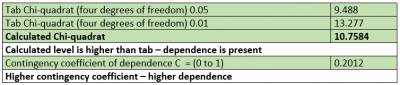

The obtained results were processed statistically, and the dependence between qualitative features was tested. The following methods were used to verify the dependence of features A and B:

- χ2 test of association applied to 2 × 2 tables;

- Fisher’s exact test applied to 2 × 2 tables involving small numbers;

- χ2 test applied to k × m contingency tables as a non-parametric testing method.
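The three tests listed above can be sketched in Python using only the standard library. This is a minimal illustration, not the authors’ actual computation, since the paper does not state which software was used. Several details are assumptions on our part. The tables are hypothetical. The χ2 statistic is Pearson’s uncorrected form, without Yates’ continuity correction. The significance level ALPHA = 0.05 is assumed because the text does not state one. The helper names (chi2_sf, chi_square_test, fisher_exact_2x2) are ours.

```python
import math


def chi2_sf(x, df):
    """Upper-tail probability P(X >= x) of a chi-square distribution with integer df."""
    if x <= 0:
        return 1.0
    half = x / 2.0
    # Closed-form starting points: Q(x; 2) = exp(-x/2), Q(x; 1) = erfc(sqrt(x/2)).
    if df % 2 == 0:
        p, k = math.exp(-half), 2
    else:
        p, k = math.erfc(math.sqrt(half)), 1
    # Recurrence: Q(x; k + 2) = Q(x; k) + (x/2)^(k/2) * exp(-x/2) / Gamma(k/2 + 1).
    while k < df:
        p += math.exp((k / 2.0) * math.log(half) - half - math.lgamma(k / 2.0 + 1))
        k += 2
    return min(p, 1.0)


def chi_square_test(table):
    """Pearson chi-square test of independence for a k x m table of counts.

    Returns (statistic, degrees of freedom, p-value); no continuity correction.
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            stat += (observed - expected) ** 2 / expected
    df = (len(row_totals) - 1) * (len(col_totals) - 1)
    return stat, df, chi2_sf(stat, df)


def fisher_exact_2x2(table):
    """Two-sided Fisher's exact test for a 2 x 2 table [[a, b], [c, d]].

    Sums the hypergeometric probabilities of all tables with the same margins
    that are no more likely than the observed table.
    """
    (a, b), (c, d) = table
    row1, col1, n = a + b, a + c, a + b + c + d

    def prob(x):
        # P(top-left cell = x) when the row and column totals are fixed.
        return math.comb(col1, x) * math.comb(n - col1, row1 - x) / math.comb(n, row1)

    p_obs = prob(a)
    lo, hi = max(0, row1 + col1 - n), min(row1, col1)
    return sum(prob(x) for x in range(lo, hi + 1) if prob(x) <= p_obs * (1 + 1e-7))


ALPHA = 0.05  # assumed significance level; not stated in the text

# Hypothetical 2 x 5 table: rows = men, women; columns = the five answer options.
answers = [[20, 15, 10, 8, 4], [30, 40, 25, 20, 10]]
stat, df, p = chi_square_test(answers)
print(f"chi2 = {stat:.3f}, df = {df}, p = {p:.4f}, reject H0: {p < ALPHA}")

# Hypothetical small 2 x 2 table, where Fisher's exact test is preferred.
print(f"Fisher p = {fisher_exact_2x2([[1, 9], [11, 3]]):.4f}")
```

For a two-row gender table with five answer columns, the χ2 test has (2 − 1)(5 − 1) = 4 degrees of freedom. Fisher’s test enumerates every table with the observed margins, which is why it is only practical for small 2 × 2 tables.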

The level of statistical dependence was graded according to the contingency coefficient, which ranges from 0 to 1, as follows:

0.0 – 0.2 – low dependence

0.3 – 0.6 – medium dependence

0.7 – 0.9 – high dependence
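The paper does not give the formula for the contingency coefficient. The sketch below assumes Pearson’s C = sqrt(χ2 / (χ2 + n)), where n is the number of respondents in the table. The bands above leave gaps (for example, between 0.2 and 0.3). The sketch closes them by letting each band start where the next stated band begins, which is likewise our assumption. C never actually reaches 1: for a k × m table its maximum is sqrt((q − 1)/q), with q = min(k, m). For a table with two gender rows, that maximum is about 0.71.

```python
import math


def contingency_coefficient(chi2, n):
    """Pearson's contingency coefficient C = sqrt(chi2 / (chi2 + n)) (assumed formula)."""
    return math.sqrt(chi2 / (chi2 + n))


def max_contingency_coefficient(k, m):
    """Largest value C can take for a k x m table: sqrt((q - 1) / q), q = min(k, m)."""
    q = min(k, m)
    return math.sqrt((q - 1) / q)


def dependence_level(c):
    """Map C to the bands in the text; the gaps between bands are closed by assumption."""
    if c < 0.3:
        return "low dependence"
    if c < 0.7:
        return "medium dependence"
    return "high dependence"


# Hypothetical example: chi2 = 20/3 computed from a 2 x 2 table of 60 respondents.
c = contingency_coefficient(20 / 3, 60)
print(round(c, 3), dependence_level(c))             # 0.316 medium dependence
print(round(max_contingency_coefficient(2, 5), 3))  # 0.707
```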

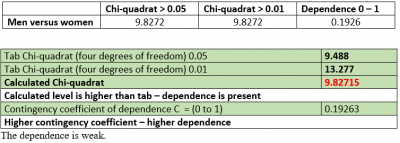

Table 1: Chi-square (χ2) values and contingency coefficients of dependence

The views of 79 men (31%) and 176 women (69%) were processed statistically (255 respondents in total).

By statistical processing, the researchers aimed to find the answer to the following research question: Does the gender of the respondents influence their views on ethics of artificial intelligence?

In order to answer the research question, two hypotheses were formulated and tested for each item of the questionnaire with respect to gender (in this case, male and female). Other genders were not included in the statistical evaluation, as only one respondent indicated being neither male nor female.

The following hypotheses were formulated:

H0: The gender of the respondents does not affect their views on ethics of artificial intelligence.

H1: The gender of the respondents influences their views on ethics of artificial intelligence.

The questionnaire included closed and open questions, which were used to find out demographic data and other data defining the characteristics of the target group (the first nine questions). These were followed by closed multiple-choice questions, each with five possible options, namely: 1. Definitely, 2. I think so, 3. Not sure, 4. I don’t think so, 5. Definitely not. These were divided into five sections, each containing eight questions:

- Artificial intelligence in general;

- Social relationships towards artificial intelligence;

- Responsibility and security;

- Law and civil freedom;

- Comfort versus loss of autonomous decision-making.
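Each item therefore yields a gender × answer contingency table with two rows and five columns. Both the χ2 test and the percentages reported in the results are derived from such a table. The following Python sketch shows one way of building the table from raw responses. The record format, the function names, and the sample responses are hypothetical.

```python
from collections import Counter

OPTIONS = ["Definitely", "I think so", "Not sure", "I don't think so", "Definitely not"]
GENDERS = ["male", "female"]  # other genders are excluded, as in the evaluation


def contingency_table(responses):
    """Count (gender, answer) pairs into a 2 x 5 table ordered by GENDERS and OPTIONS."""
    counts = Counter((g, a) for g, a in responses if g in GENDERS)
    return [[counts[(g, a)] for a in OPTIONS] for g in GENDERS]


def row_percentages(table):
    """Express each row as whole-number percentages of that row's total."""
    return [[round(100 * x / sum(row)) for x in row] for row in table]


# Hypothetical raw responses to one item: (gender, chosen option).
responses = [
    ("male", "Definitely"), ("male", "Not sure"), ("female", "I think so"),
    ("female", "Definitely not"), ("female", "I think so"), ("other", "Not sure"),
]
table = contingency_table(responses)
print(table)                   # [[1, 0, 1, 0, 0], [0, 2, 0, 0, 1]]
print(row_percentages(table))  # [[50, 0, 50, 0, 0], [0, 67, 0, 0, 33]]
```

Rounding each cell to a whole percentage explains why a reported row can add up to 99% or 101% rather than exactly 100%.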

Target group

A total of 256 adult respondents took part in the research. The participants filled in different language versions of the questionnaire (Slovak, Hungarian, and English). Even so, they were considered a single target group, and the variable studied was the gender they ticked, as the focus was on potential differences between the views of the men and women approached. Of the 256 respondents, the majority were female: 176 women (69%) and 79 men (31%) took part. The respondents’ ages ranged from 18 to 50. Their level of education varied from completed primary education to the third (doctoral) level of university study, and the majority had completed secondary school or graduated from university.

Results and discussion

The questions included in Section 1 focused on general awareness of the respondents regarding artificial intelligence. They were asked the following questions:

- Have you ever come across the term ‘artificial intelligence’?

- Would you be able to define the term ‘artificial intelligence’?

- Do you agree that artificial intelligence is part of our lives?

- Do you agree with the statement that “artificial intelligence influences our lives”?

- Do you use intelligent appliances?

- Do you agree with the statement that “artificial intelligence is our inevitable future”?

- Have you ever heard of ‘machine learning’?

- Have you ever heard of the term ‘algorithm’?

H0 could not be rejected for Questions 1 – 6 and 8, whereas for Question 7 it was rejected in favour of H1. Therefore, for this item, the gender of the respondents involved in the present survey was associated with their views (cf. Table 2).

Table 2: Results of the chi-square (χ2) calculations for Section 1, Question 7 – awareness of machine learning

As many as 50% of the men ticked ‘Definitely’ when asked whether they had heard of ‘machine learning’, while 19% stated they thought so. 10% of the male participants were not sure, 18% thought they had not, and 3% believed they definitely had not. In contrast, 32% of the female respondents stated they thought they had heard of it, while 26% definitely had. 14% of the women were not sure, 18% thought they had not, and 9% were sure they had not heard of the concept. The difference between the male and female respondents is clear: ‘Definitely’ was chosen by 50% of the men but only 26% of the women.

Section 2 ‘Social relationships towards artificial intelligence’ enquired about the following:

- Would you be able to imagine people living on their own (and feeling lonely) having a robot/humanoid for a companion?

- Would you be able to talk to a robot/humanoid about your feelings?

- If you were able to purchase a personal robot, would you like it to be as human-like as possible?

- Would you be able to imagine a robot as your partner?

- Would you be able to imagine a robot as your child?

- Would you be able to imagine robots (humanoids) as your family?

- Would you be able to imagine a robot (humanoid) having feelings?

- Would you be able to share your feelings with a humanoid robot?

H0 could not be rejected for Questions 2 – 8, whereas H1 was supported for Question 1. This suggests that, for this item, the gender of the research participants was associated with their views. Question 1 enquired about the respondents’ ability to imagine people living on their own (and feeling lonely) having a robot/humanoid for a companion.

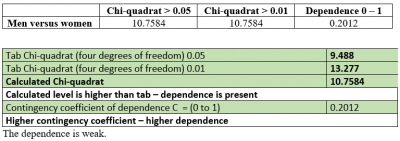

Table 3: Section 2, Question 1 – ability to imagine lonely individuals owning a humanoid robot as a companion

The results showed that, out of the 79 men asked, 32% chose the option ‘Definitely’, while 24% stated they thought they were able to imagine individuals living on their own having a robot companion. 15% of the men approached were not sure. The responses of 14% of the male respondents, who ticked ‘I don’t think so’, were rather negative, and the same percentage (14%) stated they were definitely not able to conceive of such an idea. When these results are compared with those of the female participants, the differences are obvious. Out of the 176 women asked, only 15% selected ‘Definitely’, while 34% stated they thought they could imagine it. 16% of the female participants claimed they did not know, 13% stated they did not think they were able to imagine such a situation, and 22% claimed they definitely could not. Combined, the options ‘Definitely’ and ‘I think so’ were selected by 56% of the men and only 49% of the women. It follows that, when it comes to care and family bonds, or leaving these to artificial intelligence, the men were more ready than the women to accept the idea. This raises the question of why. As Gilligan (2001) proposes, women’s levels of empathy and social feeling tend to be more developed, which could explain the difference between the answers of the male and female respondents.
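The combined figures follow from adding the first two answer options for each gender. The minimal Python check below uses the percentages reported above for Question 1; the function name is ours.

```python
# Percentages reported above for Section 2, Question 1, in the order
# Definitely, I think so, Not sure, I don't think so, Definitely not.
reported = {
    "men": [32, 24, 15, 14, 14],
    "women": [15, 34, 16, 13, 22],
}


def top_two_share(percentages):
    """Share of respondents choosing 'Definitely' or 'I think so'."""
    return percentages[0] + percentages[1]


for group, pcts in reported.items():
    print(group, top_two_share(pcts))  # men 56, then women 49
```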

According to the population curve of the Slovak Republic, the population is aging, and an increasing number of older people, combined with a very small number of births, is to be expected. With low reproduction, the aging of the population in the Slovak Republic would thus be highly significant, which would also increase the demands on the care of seniors. As presumed by Bleha, Šprocha, and Vaňo (2013, 63), “the situation in 2060 will significantly differ from the year when the prognosis was stated. The position of the post-productive age population will change the most, transforming from the least numerous to the most numerous main age group in less than four decades. The population of the two youngest age groups will continue to decline throughout the period the prognosis concerns. When comparing the initial and final ratio of the population, the number of the inhabitants aged 45 to 64 will change the least. An interesting feature of the expected development is the fact that in the period around 2045, up to three out of the four main age groups will have the same population. These are all major age groups except for the youngest age group. It is also a period when the oldest age group of the population will become the most numerous of all the main age groups”. In 2020, when the total population of the Slovak Republic was 5,459,781, the natural increase was 2,439 persons (Slovenská republika v číslach [Slovak Republic in numbers], 2021, 19).

It is not merely health care that is concerned, but also the social care of a person. In this context, a problem might arise, as the productive part of the population may not be able to spend time with seniors. Japan, which faces the same issue and will most likely face it to an even greater extent, might serve as inspiration (cf. Ekonomická informácia o teritóriu: Japonsko – všeobecné informácie o krajine [Economic information regarding the territory: Japan – general information about the country], 2020). Admittedly, the technological level of Japan cannot be compared to that of Slovakia. Still, professionals in the area in question can follow the emerging use of social robots in Japan and consider their possible implementation in Slovakia’s future development. The cultural (and religious) differences between the two countries might also be an issue, as they might significantly influence people’s views on advanced human-like technology entering their everyday lives. Moreover, according to Gluchman (2018, 22), “we have a duty to promote good, but this obligation is limited to the suitability and eligibility of the means by which this can be done”. Therefore, one must ask whether providing seniors with artificial companionship is the best society can do, and the ethical nature of ‘human-humanoid’ relationships must be addressed. Several questions arise: Is it moral to provide lonely people with an artificial instead of a human companion? Will relationships between generations be impoverished? Is it morally acceptable to use artificially intelligent entities merely as means of human reasoning and endeavour? Will humans be able to reciprocate feelings towards the artificial intelligence of humanoid robots?

Section 3 ‘Responsibility and security’ of the questionnaire comprised the following questions:

- Would you allow artificial intelligence to collect your personal data aimed at your security?

- Would you be OK with a driverless car driving you places without any possibility of your intervention?

- Ethical nature means that artificial intelligence should adhere to certain rules, such as respecting your security, protecting your personal data, not influencing your decision making, etc. Based on these attributes, do you agree that programmes using artificial intelligence should have a label of ethical nature that would inform you to what extent you are protected?

- Would you be able to imagine artificial intelligence keeping you protected?

- Would you be able to imagine artificial intelligence deciding on your protection?

- Would you allow artificial intelligence to infringe on your privacy by means of internet apps in order to protect you?

- Would you allow the use of face recognition technologies providing security in public spaces?

- Would you allow artificial intelligence to monitor and record your activities without your approval (such as tracking your movements and leisure time activities by means of video cameras, drones, GPS, phone apps, search engines)?

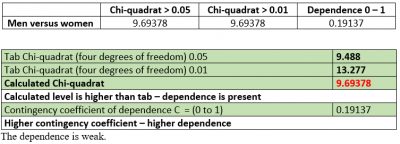

H0 could not be rejected for Questions 1 – 4 and 6 – 8, whereas H1 was supported for Question 5. This suggests that, for this item, the target group’s views on the ethics of artificial intelligence were associated with their gender (cf. Table 4).

Table 4: Section 3, Question 5 – artificial intelligence deciding on one’s protection

In Question 5, significant differences were observed between the responses of the male and female participants. As many as 27% of the men asked stated they could definitely imagine artificial intelligence deciding on their protection, while 2% said they thought they could. 27% could not decide, 15% of the male participants did not think they would be able to conceive of the idea, and as many as 29% claimed they definitely could not. On the other hand, merely 1% of the female respondents stated they could definitely imagine artificial intelligence being of service when it comes to their protection, while 14% claimed they might be able to. As with the men, 27% of the women could not decide, while 25% stated they did not think they could and 33% definitely would not entrust artificial intelligence with their safety. In other words, most of the men approached would either feel safe or could not decide, while a majority of the women asked would not feel sufficiently protected by decisions made by artificial intelligence. Once again, it could be assumed that these differences of opinion within the target group might have been caused by the development of moral awareness. According to Gilligan (2001), moral awareness differs between men and women, mainly in the social area and in empathy. At the same time, it is possible that the women considered the risks of human error when dealing with intelligent systems.
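The summary above groups the five options into positive, undecided, and negative answers. The following Python sketch reproduces that grouping from the reported percentages for Question 5. The grouping function is illustrative only, as the paper does not define the groups formally.

```python
# Percentages reported above for Section 3, Question 5, in the order
# Definitely, I think so, Not sure, I don't think so, Definitely not.
reported = {
    "men": [27, 2, 27, 15, 29],
    "women": [1, 14, 27, 25, 33],
}


def collapse(pcts):
    """Group the five-point scale into positive, undecided, and negative shares."""
    return {"positive": pcts[0] + pcts[1], "undecided": pcts[2], "negative": pcts[3] + pcts[4]}


for group, pcts in reported.items():
    print(group, collapse(pcts))
# men {'positive': 29, 'undecided': 27, 'negative': 44}
# women {'positive': 15, 'undecided': 27, 'negative': 58}
```

For the men, positive and undecided answers together make up 56%, while for the women, negative answers alone make up 58%.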

Section 4 ‘Law and civil freedom’, understood as a possible outline of the relationships between ‘We’ and ‘They’, comprised the following questions:

- If robots/humanoids were to have their own consciousness, should we have the moral right to get rid of, return, or destroy them in the case of their failure or the loss of their full function?

- Would you agree to artificial intelligence having its own consciousness?

- Have you ever heard of the female humanoid robot Sophia?

- Did you know that the humanoid Sophia was granted honorary citizenship of Saudi Arabia?

- Would you agree to similar humanoids being given citizens’ rights?

- If robots/humanoids were to have citizens’ rights, should they also have the right to vote?

- Should humanoids have a code of ethics in their software?

- If robots/humanoids were to have their own consciousness, should they also have their own rights and duties towards civil society?

H0 was confirmed for all the questions included in Section 4: the gender of the research participants did not significantly influence their views on the issues covered in this section.

The following questions were asked in Section 5 ‘Comfort versus loss of autonomous decision making’:

- When you enter keywords, would you be OK with artificial intelligence searching for web pages based on the information collected by cookies? (Cookies are files that collect information about you, on the basis of which a specific profile is created and services, products, and advertisements are offered that could make your life and decision-making easier.)

- The vision of the Neuralink project (currently under development) is to help people in wheelchairs walk again by means of a brain chip. Another vision is to connect the human brain with a computer and artificial intelligence, enabling people to express ideas by means of telepathy. Would you be OK with a chip in your brain enabling you to communicate by means of telepathy, acquire languages faster, record memories, have infrared or X-ray vision, etc., at the expense of a certain loss of privacy, since transferred thoughts could be monitored?

- Do you agree with artificial intelligence, without your consent, ranking your preferred web pages and changing that ranking based on its own statistics of your visits to those websites?

- Do you agree with the statement that, if you frequently search for information of a certain type (for example, by entering particular keywords), search engines will preferentially offer you such one-sided information, which could influence your viewpoint and decision-making?

- Do you agree with the fact that, in this way, specific information about you, your needs, and your preferences is compiled without any possibility of your intervening?

- Do you feel that creating a specific profile of your personal needs, preferences, and contacts increases your comfort?

- Do you agree that, once you accept cookies while using the internet, artificial intelligence can use advertising to influence your needs, interests, and preferences?

- Do you agree that artificial intelligence increases your comfort?

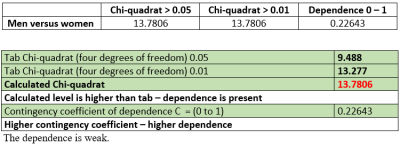

H0 was confirmed for Questions 1 – 7, while H1 was confirmed for Question 8, meaning that, for this question, the gender of the respondents influenced their views on ethics of artificial intelligence (cf. Table 5).

Table 5: Section 5, Question 8 – artificial intelligence providing comfort

When the target group was asked whether they agreed that artificial intelligence increased their comfort, 25% of the men approached claimed it definitely did, while 39% stated they thought so. A further 19% of the male respondents could not decide, 13% said they did not think so, and 4% stated that this was definitely not the case. On the other hand, only 10% of the female participants ticked the option ‘Definitely’, even though as many as 43% stated they thought so. A further 26% were not sure, while equal shares of the women (10% each) stated either that they did not think so or that it definitely did not increase their comfort. As can be seen, considerably more of the men in the present target group (64%) agreed that artificial intelligence provides increased levels of comfort than was the case for the women (53%).
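The 64% and 53% figures are obtained by merging the two agreeing answer options. The short Python sketch below reproduces that step from the percentages reported for Question 8; the function name `collapse_likert` and the English labels for the five answer options are illustrative assumptions, not part of the original questionnaire. It also makes visible that the women’s reported shares add up to 99%, presumably because of rounding.

```python
# Answer shares (%) for Section 5, Question 8 as reported in the text, ordered:
# definitely yes, I think so, undecided, I do not think so, definitely not.
REPORTED_Q8 = {
    "men": [25, 39, 19, 13, 4],
    "women": [10, 43, 26, 10, 10],
}


def collapse_likert(shares):
    """Merge a five-point answer distribution into (agree, undecided, disagree)."""
    if len(shares) != 5:
        raise ValueError("expected five answer categories")
    return sum(shares[:2]), shares[2], sum(shares[3:])


for group, shares in REPORTED_Q8.items():
    agree, undecided, disagree = collapse_likert(shares)
    # Published shares are rounded, so a group's total may differ from 100.
    print(f"{group}: agree {agree}%, undecided {undecided}%, "
          f"disagree {disagree}% (total {sum(shares)}%)")
```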

Conclusions

Based on the present research into ethics of artificial intelligence (with the data applicable only to the target group approached for the purposes of the survey), the following has been found. Taken as a whole, none of the observed areas (artificial intelligence in general, social relationships towards artificial intelligence, responsibility and security, law and civil freedom, comfort versus loss of autonomous decision-making) showed a major influence of the participants’ gender on their views on ethics of artificial intelligence.

Nevertheless, gender did appear to have some influence on specific aspects that the participants were asked about. The most significant differences were found in the awareness of machine learning: as many as 69% of the men surveyed, in comparison to 58% of the women, were aware of its existence. Another significant difference was recorded in the ability to imagine people who live on their own (and feel lonely) having a humanoid robot as their companion. In this context, more men (56%) than women (49%) claimed they were definitely, or at least possibly, able to imagine such companions being used. Different levels of empathy as well as social feeling might be at play here. Similarly, a higher percentage of the men (29%) would entrust artificial intelligence with their safety, while a much lower proportion of the female respondents (15%) would be happy to do so. The last issue that appeared to be perceived differently by the two genders was the increased comfort provided by artificial intelligence: as many as 64% of the male participants, in comparison to 53% of the women, felt that intelligent machines increased their comfort.

It seems there are areas in which the men and women involved in the present research responded differently. Even though the findings only apply to the target group in question, ethical competences are a highly important factor in the development of human society. Thus, it could be concluded that the mere ability to reproduce information is not sufficient; information also needs to be placed in a society-wide framework. Without the ability to communicate openly, empathise, and act responsibly and prosocially, humans might consider artificially intelligent systems to be a great danger. This could be addressed by continuously improving people’s ethical competences through ethics courses in all areas of human society. The creation of enforceable codes of ethics both for the manufacturers and developers of intelligent systems for business and for their users seems to be key.

From the viewpoint of society as a whole, a code of ethics at the level of governments and their subordinate bodies is necessary, one that would guarantee the enforceability of human dignity, privacy, and security in the public sphere. Ethics is an important part of the education system, especially education for responsibility and for a broader understanding of the overall impact of intelligent systems on individual morality, as well as on society and its moral development.

Authors:

PhDr. Jana Bartal, PhD.

Szlovák Tanítási Nyelvű Óvoda (Hungary)

Mgr. Eva Eddy, PhD.

University of Prešov (Slovakia)

References

White Paper on Artificial Intelligence – A European Approach to Excellence and Trust (February 2020). [online] [Retrieved September 10, 2021]. Available at: https://ec.europa.eu/info/publications/white-paper-artificial-intelligen...

BARTNECK, CH. – LÜTGE, CH. – WAGNER, A. – WELSH, S. (2021). An Introduction to Ethics in Robotics and AI. Springer, ISBN 978-3-030-51110-4 (eBook)

BLEHA, B. – ŠPROCHA, B. – VAŇO, B. (2013). Prognóza populačného vývoja Slovenskej republiky do roku 2060. Infostat Inštitút informatiky a štatistiky Bratislava, Available as a pdf at: Prognoza2060.pdf

BOSTROM, N. (2014). Superintelligence: Paths, Dangers, Strategies. Oxford: Oxford University Press. ISBN 978-0-19-967811-2

BUBER, M. (2016). Já a Ty. Praha: Portál. 128 p. ISBN 978-80-262-1093-1

COONEY, M. D. – NISHIO, S. – ISHIGURO, H. (2014). Designing Robots for Well-being: Theoretical Background and Visual Scenes of Affectionate Play with a Small Humanoid Robot. Lovotics 2014, 1:1 http://dx.doi.org/10.4172/2090-9888.1000101

FERNANDO, O. N. N. – NAKATSU, R. – KOH, J. T. K. V. (2013). Cultural Robotics: The Culture of Robotics and Robotics in Culture. In: International Journal of Advanced Robotic Systems, Volume 10, 400. Available at: www.intechopen.com

FONG, T. – NOURBAKHSH, I. – DAUTENHAHN, K. (2003). A survey of socially interactive robots. In: Robotics and Autonomous Systems 42 (2003), pp. 143–166, ISSN 0921-889

GILLIGANOVÁ, C. (2001). Jiným hlasem: O rozdílné psychologii žen a mužů. Praha: Portál. ISBN 80-7178-402-8.

GLUCHMAN, V. (2018). Kant and Consequentialism (Reflections on Cummiskey's Kantian Consequentialism). In: Studia Philosophica Kantiana. 1/2018, pp. 18 – 29 [online] [Retrieved January 5, 2022]. Available at: https://philarchive.org/archive/GLUKAC

HOFFMAN, G. – JU, W. (2014). Designing Robots with Movement in Mind, Journal of Human-Robot Interaction, Vol. 3, No. 1, pp. 89-122, doi: 10.5898/JHRI.3.1

IENCA, M. (2019). Intelligenza². Per un'unione di intelligenza naturale e artificiale. Turin: Rosenberg & Sellier

JOBIN, A. – IENCA, M. – VAYENA, E. (2019). Artificial Intelligence: the global landscape of ethics guidelines. [online] [Retrieved September 10, 2021]. Available at: https://arxiv.org/ftp/arxiv/papers/1906/1906.11668.pdf

JOBIN, A. – BILAT, L. (2016). Les services numériques grand public et leurs utilisateurs: Trois approches sociotechniques contemporaines. A contrario. Lausanne: BNS Press. 22(1)

HUI, L. (2018). Industrial Innovation and Competition Map (third series), Available at: http://eng.siss.sh.cn/c/2018-10-25/555968.shtml

HUSTEDT, C. – FETIC, L. (2020). From principles to practice: How can we make AI ethics measurable? [online] April 2, 2020. Available at: https://ethicsofalgorithms.org/category/publications/

KAKKORI, L. – HUTTUNEN, R. (2010). The Gilligan-Kohlberg Controversy and Its Philosophico-Historical Roots. In: BESLEY, T., GIBBONS, A., ed. The Encyclopaedia of Educational Philosophy and Theory. 2010. Available at: http://eepat.net/doku.php?id=the_gilligan_kohlberg_controversy_and_its_p... ophicohistorical_roots

Kempelen Institute of Intelligent Technologies, Available at: https://kinit.sk

KOHLBERG, L. (1963). The Development of Children’s Orientations Toward a Moral Order I. Sequence in the Development of Moral Thought. Vita Humana, 6, 1963 pp. 11-33 Available at: https://www.jstor.org/stable/26762149

LENGDEN, B. (2021). New Study Finds 40 Percent of People Would Have Sex with A Robot. [online] [Retrieved December 13, 2021]. Available at: https://www.ladbible.com/entertainment/weird-study-claims-40-of-people-w...

LIMA, G. – KIM, CH. – RYU, S. – JEON, CH. – CHA, M. (2020). Proceedings of the ACM on Human-Computer Interaction, Volume 4, Issue CSCW2, October 2020, Article No. 135, pp. 1–24. https://doi.org/10.1145/3415206

LOCKWOOD, G. – MARDA, V. (2014). Harassment in the Workplace: The Legal Context, “Jurisprudence”, The International Association of Law and Politics, September 2014. ISSN 1392-6195 (information about the author and her publications) [online] [Retrieved December 13, 2021]. Available at: http://vidushimarda.com/writing/

MARDA, V. (2018). Artificial Intelligence Policy in India: A framework for engaging the limits of data-driven decision making, Philosophical Transactions A: Mathematical, Physical and Engineering Sciences, October 2018, doi: 10.1098/rsta.2018.0087, Available at: http://vidushimarda.com/writing/

MARKOVÁ, D. (2012). O sexualite, sexuálnej morálke a súčasných partnerských vzťahoch. Nitra: Garmond, ISBN 978-80-89148-76-9

MARKOVÁ, D. (2016). Etická výchova v Adlerovskom prístupe. Nitra: Garmond, ISBN 978-80-89703-27-2

MILL, J. S. (2001). O politickej slobode. Bratislava: Kalligram. 175 p. ISBN 80-7149-405-4

Ministerstvo zahraničných vecí a európskych záležitostí Slovenskej republiky: Ekonomická informácia o teritóriu Japonsko všeobecné informácie o krajine, 2020 [online] [Retrieved December 13, 2021]. Available at: www.mzv.sk/tokio, emb.tokyo@mzv.sk

NITSCH, V. – POPP, M. (2014). Emotions in robot psychology. Biological Cybernetics, doi: 10.1007/s00422-014-0594-6; also available at: www.researchgate.net/publication/260298491_Emotions_in_Robot_Psychology

PIKUS, M. – PODROUŽEK, J. (2019). Príručka etiky algoritmov. Bratislava, Available at: http://www.e-tika.sk/

POKOL, B. (2010). Morálelméleti vizsgálódások. Budapest: Kairosz

POKOL, B. (2016). A kollektív szuperintelligencia elmélete. Jogelméleti Szemle, XVII. évf. 3. szám, pp. 122-132

POKOL, B. (2017). Mesterséges intelligencia: egy új létréteg kialakulása? Információs Társadalom 2017/1.sz.

POKOL, B. (2018). A mesterséges intelligencia társadalma. Budapest: Kairosz

RAJNEROWICZ, K. (2021). Will AI Take Your Job? Fear of AI and AI Trends for 2021, [online] [Retrieved September 13, 2021]. Available at: https://www.tidio.com/blog/ai-trends/

SAMANI, H. A. – CHEOK, A. D. – THARAKAN, M. J., et al. (2011a). A Design Process for Lovotics. In: Human–Robot Personal Relationships. Springer LNICST series, Volume 59, pp. 118–125.

SAMANI, H. A. – CHEOK, A. D. (2011b). From Human – Robot Relationship to Robot – Based Leadership. In: 2011 IEEE International Conference on Human System Interaction, HSI 2011.

SAMANI, H. – SAADATIAN, E. – PANG, N., et al. (2013). Cultural Robotics: The Culture of Robotics and Robotics in Culture. International Journal of Advanced Robotic Systems, Volume 10: 400. doi: 10.5772/57260.

SMITH, M. – MANN, M. – URBAS, G. (2018). Biometrics, Crime and Security. Routledge, 2018. 134 p. ISBN: 978-1138742802, eBook ISBN: 9781315182056

Štatistický úrad Slovenskej republiky: Slovenská republika v číslach (2021), Available at: SR_v_cislach_2021.pdf

VIETH, K. – WAGNER, B. (2017). How algorithmic processes impact opportunities to be part of society. Discussion paper, Ethics of Algorithms. Available at: https://ethicsofalgorithms.org/2017/06/02/calculating-participation-how-...

Výzkum potenciálu rozvoje umělé inteligence v České republice: Analýza právně-etických aspektů rozvoje umělé inteligence a jejích aplikací v ČR. Available at: AI-pravne-eticka-zprava-2018_final.pdf